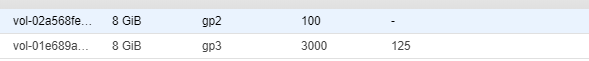

I created two volumes, one gp3 and one gp2.

    mount /dev/nvme1n1p1 /mnt/gp3
    mount /dev/nvme2n1p1 /mnt/gp2
    yum install gcc zlib-devel
    wget https://codeload.github.com/axboe/fio/tar.gz/fio-3.24
    tar zxvf fio-3.24
    cd fio-fio-3.24/
    ./configure --prefix=/home/fio
    make && make install

Install the required packages, gcc and zlib-devel, then compile.

fio is a tool I am using for the first time myself.

    fio --directory=/mnt/gp3 --name fio_test_file --direct=1 --rw=randread \
        --bs=4K --size=1G --numjobs=7 --time_based --runtime=180 --group_reporting \
        --norandommap

This test runs for 3 minutes with seven jobs (fio starts them as seven processes), each doing random reads in 4K blocks against its own 1G file in Direct I/O mode.

Jobs: 7 (f=7): [r(7)][100.0%][r=11.7MiB/s][r=3001 IOPS][eta 00m:00s]

fio_test_file: (groupid=0, jobs=7): err= 0: pid=2450: Wed Dec 2 06:59:19 2020

read: IOPS=3016, BW=11.8MiB/s (12.4MB/s)(2121MiB/180004msec)

clat (usec): min=188, max=296635, avg=2319.05, stdev=1213.65

lat (usec): min=188, max=296635, avg=2319.21, stdev=1213.65

clat percentiles (usec):

| 1.00th=[ 408], 5.00th=[ 922], 10.00th=[ 1287], 20.00th=[ 1598],

| 30.00th=[ 1762], 40.00th=[ 1926], 50.00th=[ 2057], 60.00th=[ 2212],

| 70.00th=[ 2474], 80.00th=[ 2933], 90.00th=[ 3818], 95.00th=[ 4621],

| 99.00th=[ 6194], 99.50th=[ 6587], 99.90th=[ 7767], 99.95th=[ 8455],

| 99.99th=[10028]

bw ( KiB/s): min= 9848, max=32328, per=100.00%, avg=12069.08, stdev=167.76, samples=2513

iops : min= 2462, max= 8082, avg=3017.27, stdev=41.94, samples=2513

lat (usec) : 250=0.05%, 500=2.12%, 750=1.59%, 1000=1.99%

lat (msec) : 2=40.61%, 4=45.04%, 10=8.59%, 20=0.01%, 50=0.01%

lat (msec) : 250=0.01%, 500=0.01%

cpu : usr=0.12%, sys=0.29%, ctx=543082, majf=0, minf=93

IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=542985,0,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=1

Run status group 0 (all jobs):

READ: bw=11.8MiB/s (12.4MB/s), 11.8MiB/s-11.8MiB/s (12.4MB/s-12.4MB/s), io=2121MiB (2224MB), run=180004-180004msec

Disk stats (read/write):

nvme1n1: ios=542478/13, merge=0/3, ticks=1253070/0, in_queue=1078350, util=99.97%

The result is almost exactly 3,000 IOPS.

Now, shall we test the other device, the gp2 volume?

    fio --directory=/mnt/gp2 --name fio_test_file --direct=1 --rw=randread \
        --bs=4K --size=1G --numjobs=7 --time_based --runtime=180 --group_reporting \
        --norandommap

fio-3.24

Starting 7 processes

Jobs: 7 (f=7): [r(7)][100.0%][r=11.7MiB/s][r=2997 IOPS][eta 00m:00s]

fio_test_file: (groupid=0, jobs=7): err= 0: pid=1316: Wed Dec 2 07:13:16 2020

read: IOPS=3016, BW=11.8MiB/s (12.4MB/s)(2121MiB/180004msec)

clat (usec): min=192, max=298525, avg=2318.95, stdev=1162.93

lat (usec): min=192, max=298525, avg=2319.12, stdev=1162.93

clat percentiles (usec):

| 1.00th=[ 457], 5.00th=[ 963], 10.00th=[ 1254], 20.00th=[ 1565],

| 30.00th=[ 1729], 40.00th=[ 1909], 50.00th=[ 2057], 60.00th=[ 2245],

| 70.00th=[ 2540], 80.00th=[ 3032], 90.00th=[ 3818], 95.00th=[ 4490],

| 99.00th=[ 5932], 99.50th=[ 6259], 99.90th=[ 6915], 99.95th=[ 7373],

| 99.99th=[ 8455]

bw ( KiB/s): min= 9808, max=26696, per=100.00%, avg=12069.37, stdev=141.33, samples=2513

iops : min= 2452, max= 6674, avg=3017.34, stdev=35.33, samples=2513

lat (usec) : 250=0.01%, 500=1.48%, 750=1.61%, 1000=2.48%

lat (msec) : 2=41.05%, 4=44.98%, 10=8.40%, 20=0.01%, 250=0.01%

lat (msec) : 500=0.01%

cpu : usr=0.12%, sys=0.30%, ctx=543092, majf=0, minf=90

IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=543002,0,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=1

Run status group 0 (all jobs):

READ: bw=11.8MiB/s (12.4MB/s), 11.8MiB/s-11.8MiB/s (12.4MB/s-12.4MB/s), io=2121MiB (2224MB), run=180004-180004msec

Disk stats (read/write):

nvme2n1: ios=542683/0, merge=0/0, ticks=1253810/0, in_queue=1076380, util=99.74%

Huh, the result is the same.. why? It is pinned at 3,000 IOPS even though this is gp2..

I detach gp3 and run the test again.

Jobs: 7 (f=7): [r(7)][75.6%][r=11.7MiB/s][r=3001 IOPS][eta 00m:44s]

So I reattach gp3, raise its provisioned IOPS, and run the test against gp3 again.
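
The post does not say how the IOPS setting was changed. One way to do it is with the AWS CLI's `aws ec2 modify-volume`; the sketch below wraps that call in a helper. The function name, the volume ID, and the target of 6,000 IOPS (inferred from the result that follows) are all assumptions for illustration.

```shell
# Hypothetical helper: raise the provisioned IOPS of a gp3 volume.
# Assumes the AWS CLI is installed and the credentials may call ec2:ModifyVolume.
raise_gp3_iops() {
  local volume_id="$1" iops="$2"
  aws ec2 modify-volume --volume-id "$volume_id" --iops "$iops"
}

# Example call (placeholder volume ID, not taken from the post):
#   raise_gp3_iops vol-0123456789abcdef0 6000
# The state of the change can then be checked with:
#   aws ec2 describe-volumes-modifications --volume-ids vol-0123456789abcdef0
```

The modification is applied while the volume stays attached, so it is worth waiting until the modification state is no longer `modifying` before benchmarking.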

fio-3.24

Starting 7 processes

Jobs: 7 (f=7): [r(7)][100.0%][r=23.4MiB/s][r=6002 IOPS][eta 00m:00s]

fio_test_file: (groupid=0, jobs=7): err= 0: pid=1393: Wed Dec 2 07:29:50 2020

read: IOPS=6033, BW=23.6MiB/s (24.7MB/s)(4242MiB/180002msec)

clat (usec): min=146, max=327858, avg=1158.79, stdev=1152.61

lat (usec): min=146, max=327858, avg=1158.95, stdev=1152.62

clat percentiles (usec):

| 1.00th=[ 281], 5.00th=[ 371], 10.00th=[ 441], 20.00th=[ 586],

| 30.00th=[ 685], 40.00th=[ 766], 50.00th=[ 848], 60.00th=[ 947],

| 70.00th=[ 1090], 80.00th=[ 1434], 90.00th=[ 2343], 95.00th=[ 3294],

| 99.00th=[ 5014], 99.50th=[ 5342], 99.90th=[ 6456], 99.95th=[ 8455],

| 99.99th=[26608]

bw ( KiB/s): min=16360, max=37232, per=100.00%, avg=24140.01, stdev=241.45, samples=2513

iops : min= 4090, max= 9308, avg=6035.00, stdev=60.36, samples=2513

lat (usec) : 250=0.64%, 500=13.39%, 750=23.96%, 1000=26.32%

lat (msec) : 2=23.16%, 4=9.85%, 10=2.63%, 20=0.01%, 50=0.04%

lat (msec) : 100=0.01%, 250=0.01%, 500=0.01%

cpu : usr=0.24%, sys=0.53%, ctx=1086208, majf=0, minf=87

IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=1085994,0,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=1

Run status group 0 (all jobs):

READ: bw=23.6MiB/s (24.7MB/s), 23.6MiB/s-23.6MiB/s (24.7MB/s-24.7MB/s), io=4242MiB (4448MB), run=180002-180002msec

Disk stats (read/write):

nvme2n1: ios=1085375/0, merge=0/0, ticks=1249280/0, in_queue=1077940, util=100.00%

This confirms that the gp3 IOPS did go up.
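
The runs above all use an I/O depth of 1 (see the `IO depths` line), so each of the 7 jobs has a single request in flight. Under that assumption, throughput is roughly the number of jobs divided by the average latency (Little's law). A small sketch, with a helper name of my own choosing, that plugs in the average `lat` values from the output:

```shell
# est_iops JOBS AVG_LAT_USEC
# With iodepth=1 each job has one request in flight, so
# IOPS is roughly jobs / average latency (Little's law).
est_iops() {
  awk -v jobs="$1" -v lat_us="$2" 'BEGIN { printf "%.0f\n", jobs * 1000000 / lat_us }'
}

est_iops 7 2319.21   # first gp3 run  -> prints 3018
est_iops 7 1158.95   # gp3 after raising IOPS -> prints 6040
```

These estimates are close to the 3016 and 6033 IOPS that fio reported. When IOPS doubled, the average latency roughly halved.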

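To summarize: in the gp2 performance test, IOPS is pinned at 3,000. My guess is that the figure is derived from bandwidth, which is why it lands on exactly 3,000, and that it is not the disk's actual IOPS.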
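
One way to check this guess against the output itself: the fio report includes the number of reads issued and the elapsed time, so both IOPS and bandwidth can be recomputed by hand. A minimal sketch using figures copied from the first run (the variable names are mine):

```shell
# Numbers copied from the first fio run above.
total_reads=542985     # "issued rwts: total=542985"
runtime_msec=180004    # "run=180004-180004msec"
block_bytes=4096       # --bs=4K

# IOPS = completed reads / elapsed seconds
iops=$(awk -v n="$total_reads" -v ms="$runtime_msec" \
  'BEGIN { printf "%.1f", n / (ms / 1000) }')

# Bandwidth in MiB/s = IOPS * block size / 2^20
bw_mib=$(awk -v n="$total_reads" -v ms="$runtime_msec" -v b="$block_bytes" \
  'BEGIN { printf "%.1f", n / (ms / 1000) * b / 1048576 }')

echo "IOPS=$iops BW=${bw_mib}MiB/s"   # prints IOPS=3016.5 BW=11.8MiB/s
```

Both values match the `read:` line (IOPS=3016, BW=11.8MiB/s). This suggests the reported IOPS is consistent with a count of completed 4K reads, with bandwidth following from it as IOPS times block size.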

Is this even a tool that can measure bandwidth........ I should dig into the tool, but I can't be bothered.. that's for later... -_-;

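The gp2 burst is probably 3,000. If you leave it running a good while longer, the credits should run out and the real speed should show, shouldn't it?

I should take a look at that part too~

I haven't looked at the burst part yet.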

https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/ebs-volume-types.html

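I looked, but the docs don't have anything on burst yet.. I think they will be updated if we wait a little longer~

Ah, this is what I was referring to.

General Purpose SSD (gp2) volumes offer cost-effective storage that is ideal for a broad range of workloads. These volumes deliver single-digit millisecond latencies and the ability to burst to 3,000 IOPS for extended periods of time.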

And gp3 seems to be a consistent baseline.

General Purpose SSD (gp3) volumes offer cost-effective storage that is ideal for a broad range of workloads. These volumes deliver a consistent baseline rate of 3,000 IOPS and 125 MiB/s, included with the price of storage. You can provision additional IOPS (up to 16,000) and throughput (up to 1,000 MiB/s) for an additional cost.
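
To put a number on "extended periods": AWS documents gp2 as having a baseline of 3 IOPS per GiB (minimum 100 IOPS) and a credit bucket of 5.4 million I/O credits that lets smaller volumes burst to 3,000 IOPS. The sketch below estimates how long a full bucket lasts under a sustained 3,000 IOPS load. The helper name is mine, and the 100 GiB size is a hypothetical example, since the post does not state the size of the gp2 volume.

```shell
# gp2_burst_seconds SIZE_GIB
# Prints how many seconds a full gp2 credit bucket lasts at a sustained
# 3,000 IOPS, or "no-burst-limit" if the baseline is already >= 3,000 IOPS.
gp2_burst_seconds() {
  awk -v size="$1" 'BEGIN {
    bucket = 5400000             # I/O credits in a full gp2 bucket
    burst  = 3000                # burst ceiling in IOPS
    base   = size * 3            # baseline: 3 IOPS per GiB ...
    if (base < 100) base = 100   # ... with a floor of 100 IOPS
    if (base >= burst) { print "no-burst-limit"; exit }
    printf "%.0f\n", bucket / (burst - base)
  }'
}

gp2_burst_seconds 100    # hypothetical 100 GiB volume -> prints 2000
```

Under these assumptions a 100 GiB gp2 volume could hold 3,000 IOPS for about 33 minutes, so a 180-second fio run would finish long before the credits run out.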

Ahh~ that answers my question!! Thank you!!!